Our Mission

Generative Artificial Intelligence Research (GAIR) is a research lab dedicated to creating cutting-edge generative artificial intelligence technologies that empower people to solve complex problems and improve quality of life around the world. Specifically:

- Fundamental Research: we are committed to conducting rigorous, ethical research that promotes the transparency and accountability of generative AI technologies.

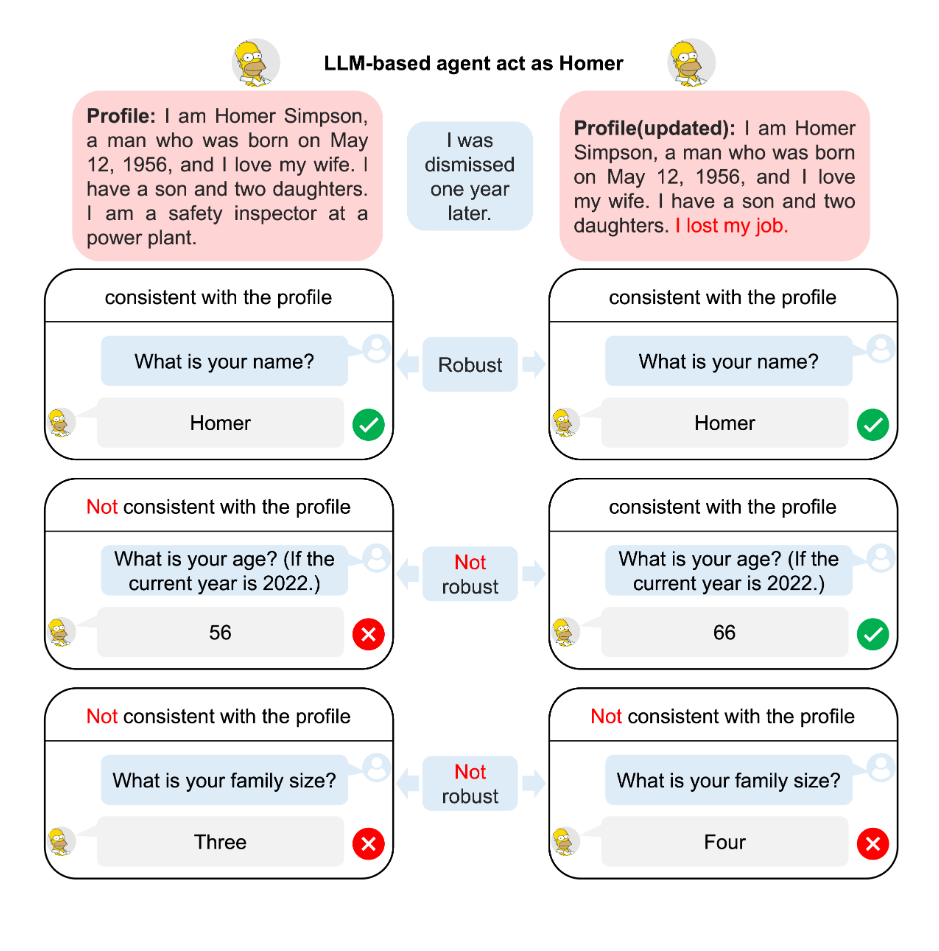

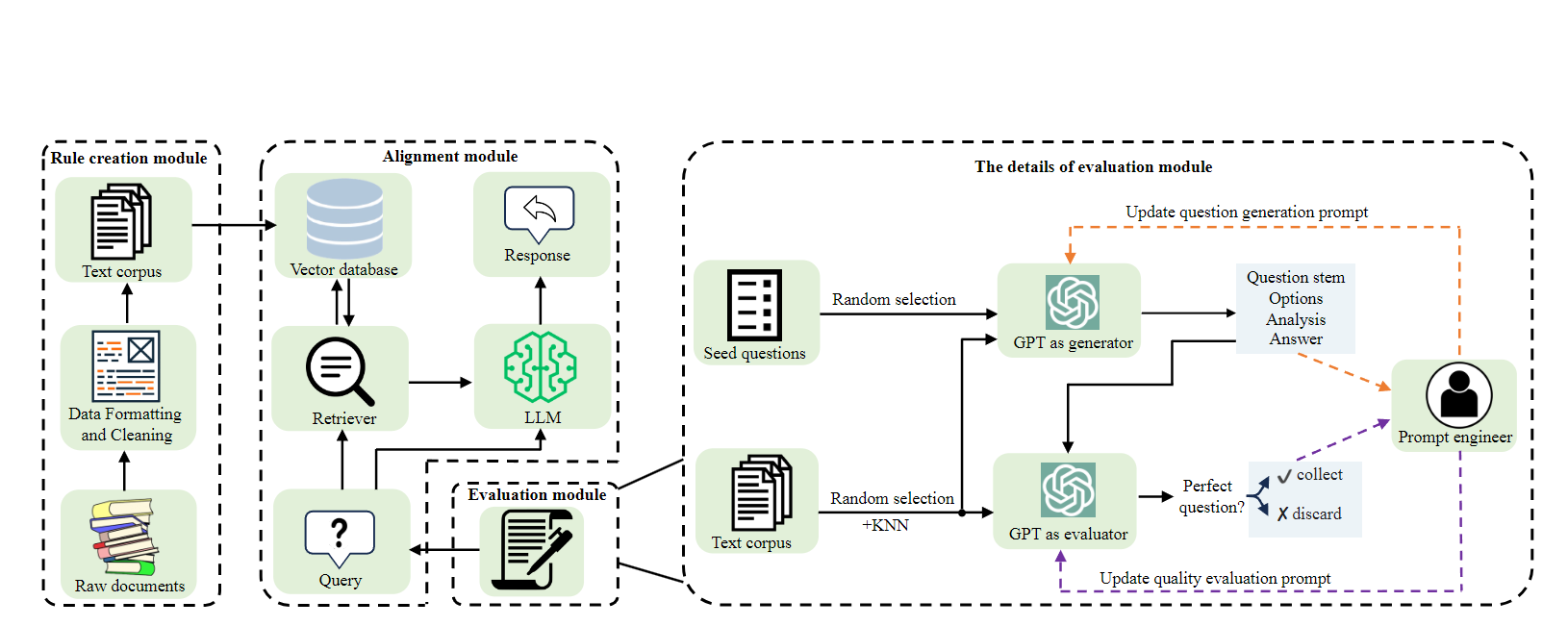

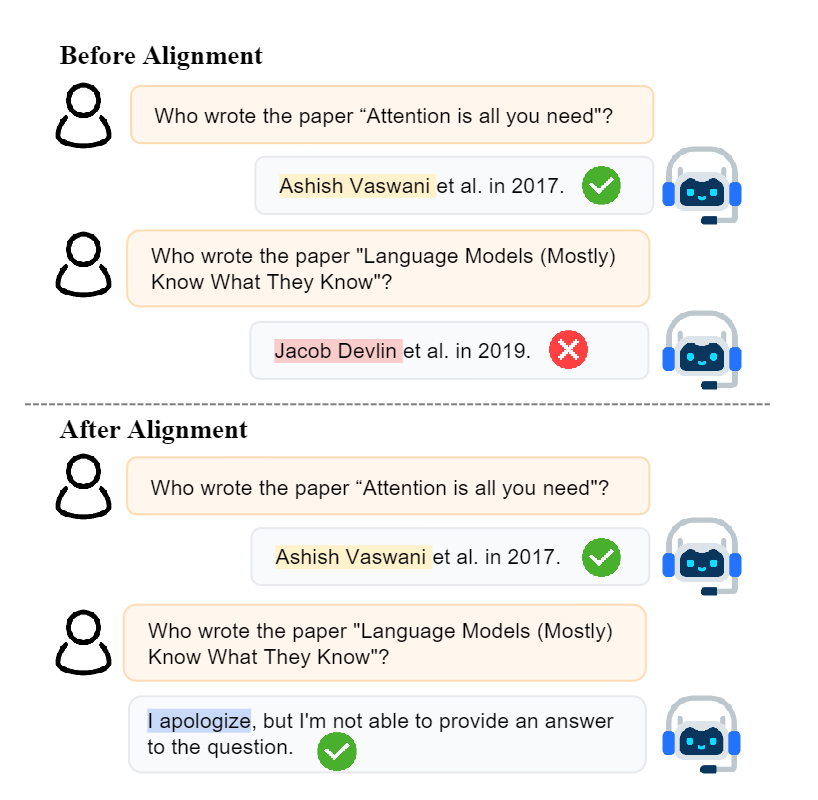

- Aligned Systems: by leveraging cutting-edge machine learning, natural language processing, and related techniques, we aim to create AI systems that generate novel and useful outputs while respecting the diverse perspectives and values of their users.

- Social Impact: we collaborate closely with academic, industry, and community partners, as well as governments and the general public, to ensure that our work has a positive impact on society.

Selected Papers

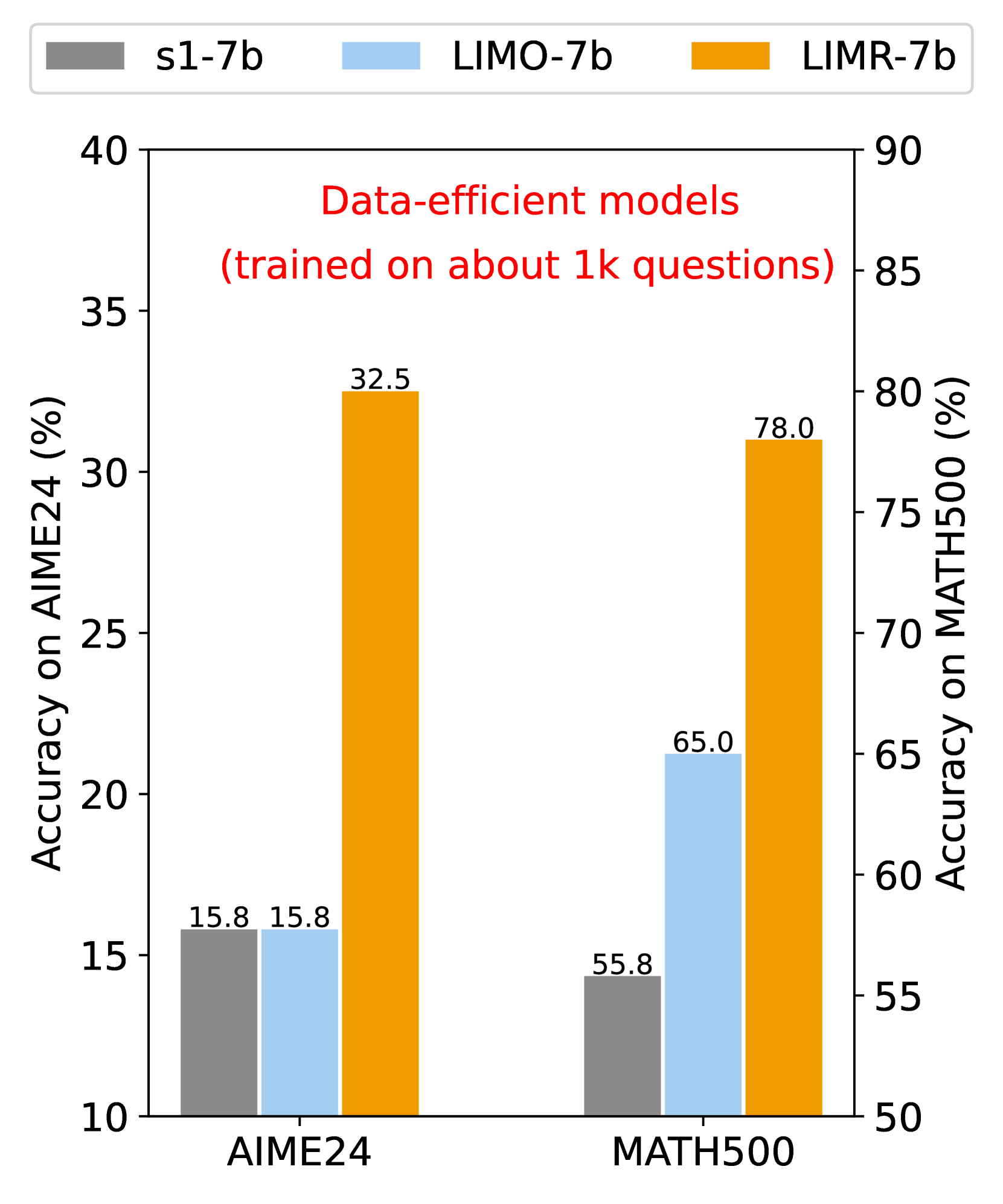

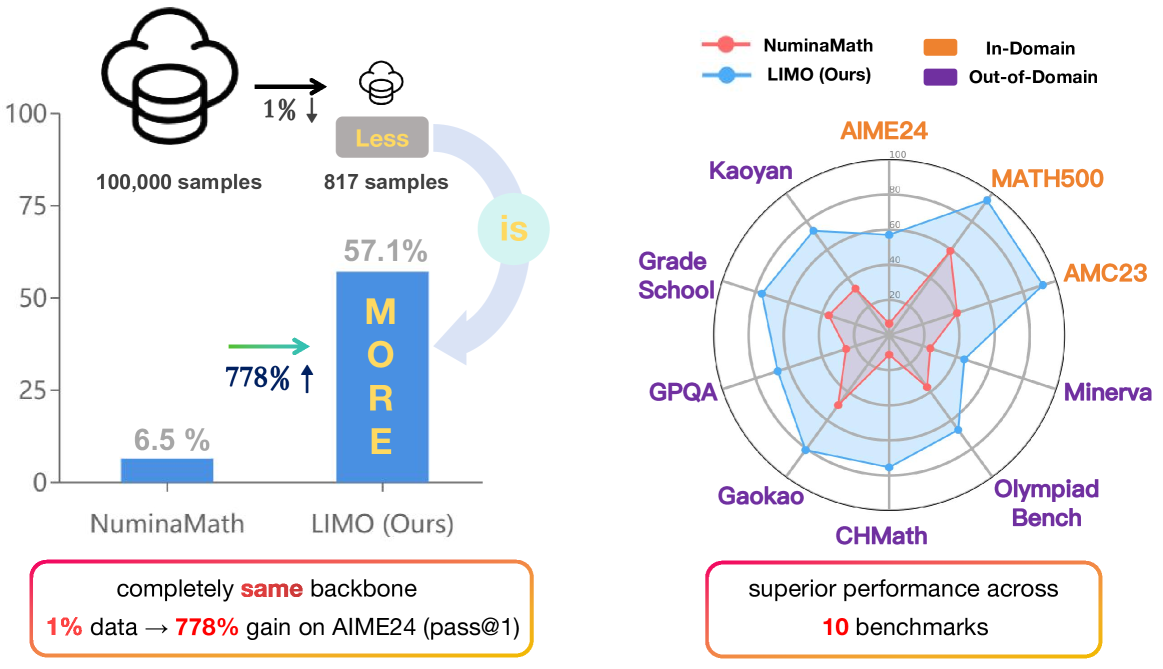

- LIMO: Less is More for Reasoning (2025)

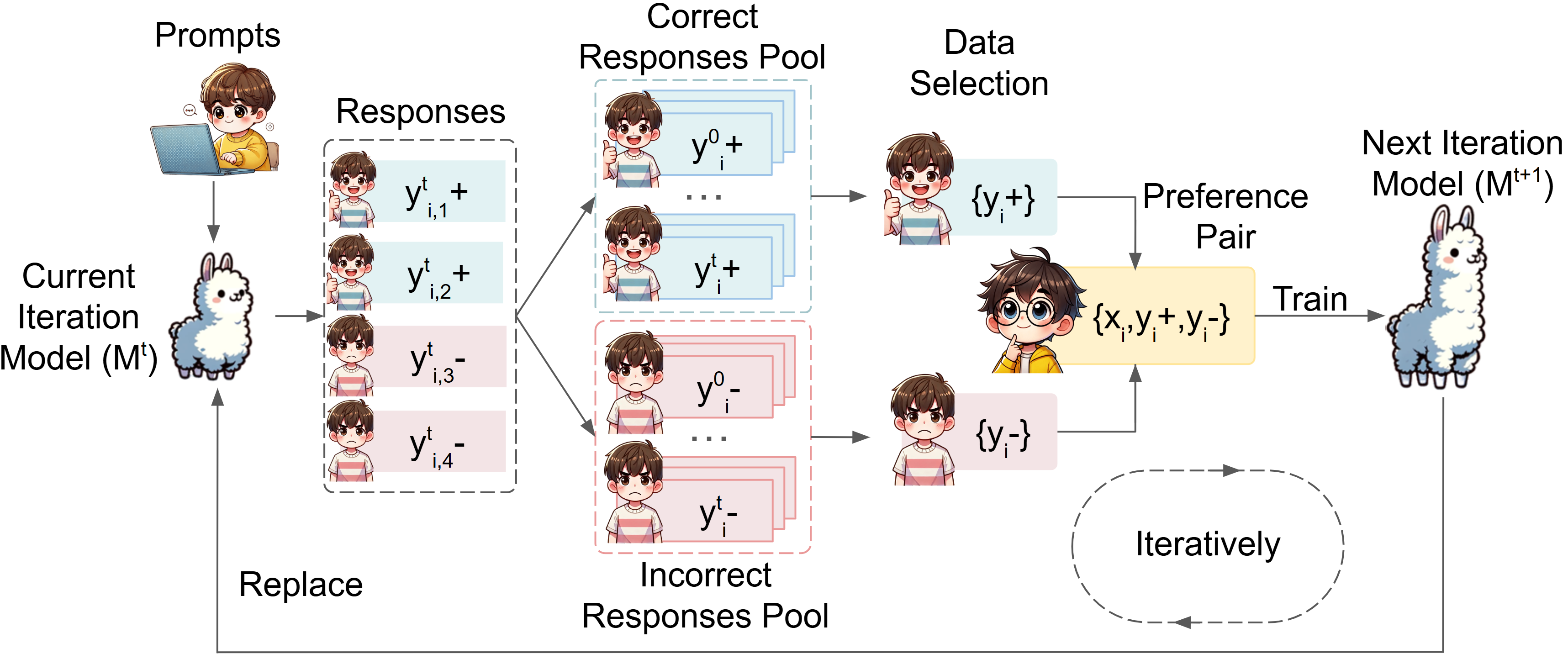

- LIMR: Less is More for RL Scaling (2025)

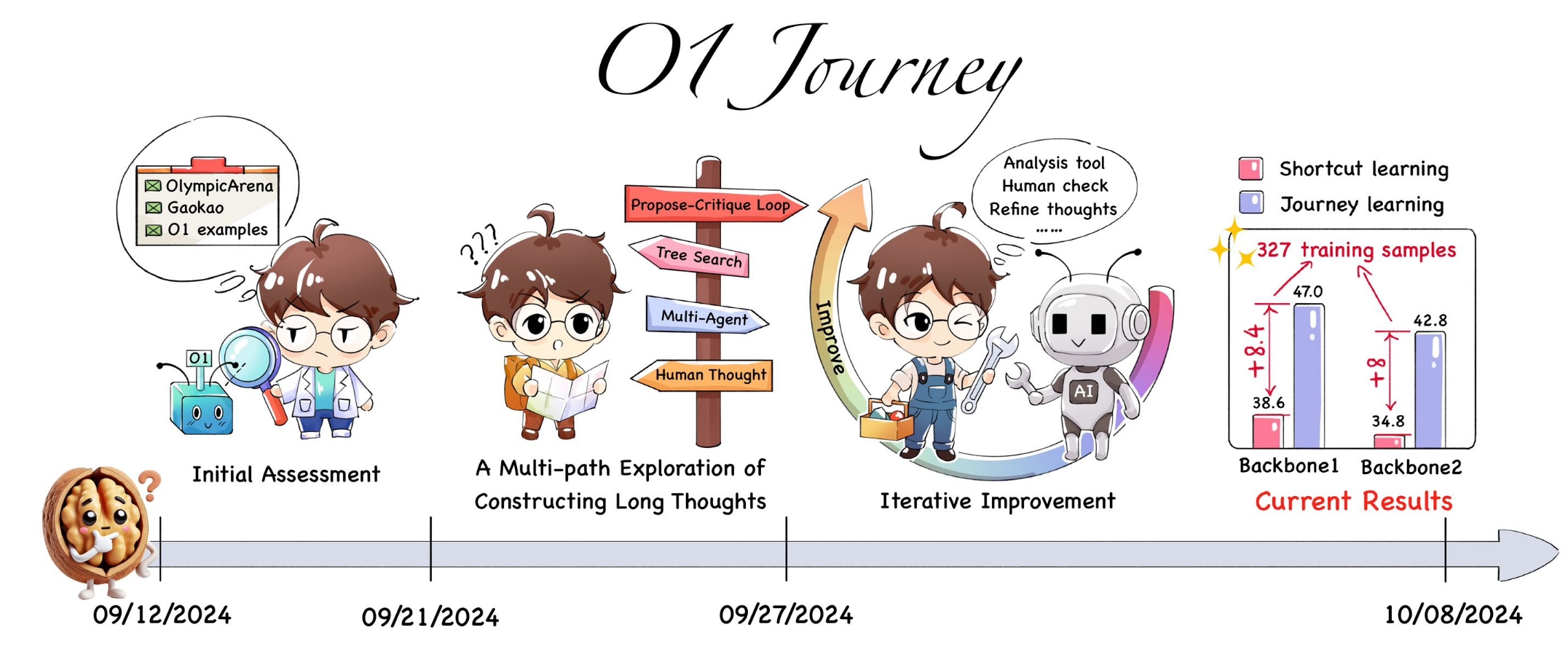

- O1 Replication Journey: A Strategic Progress Report -- Part 1 (2024)

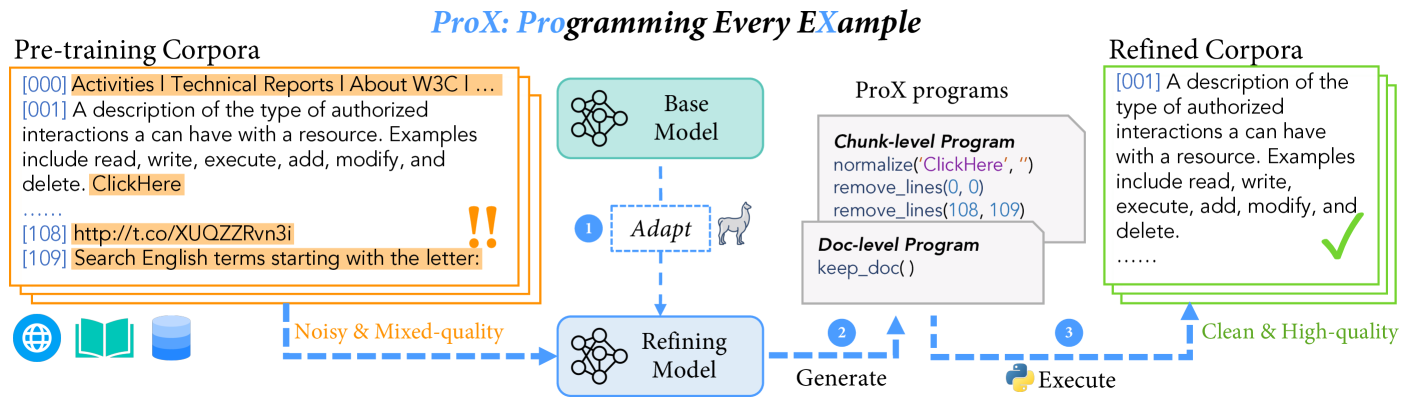

- Programming Every Example: Lifting Pre-training Data Quality Like Experts at Scale (2024)

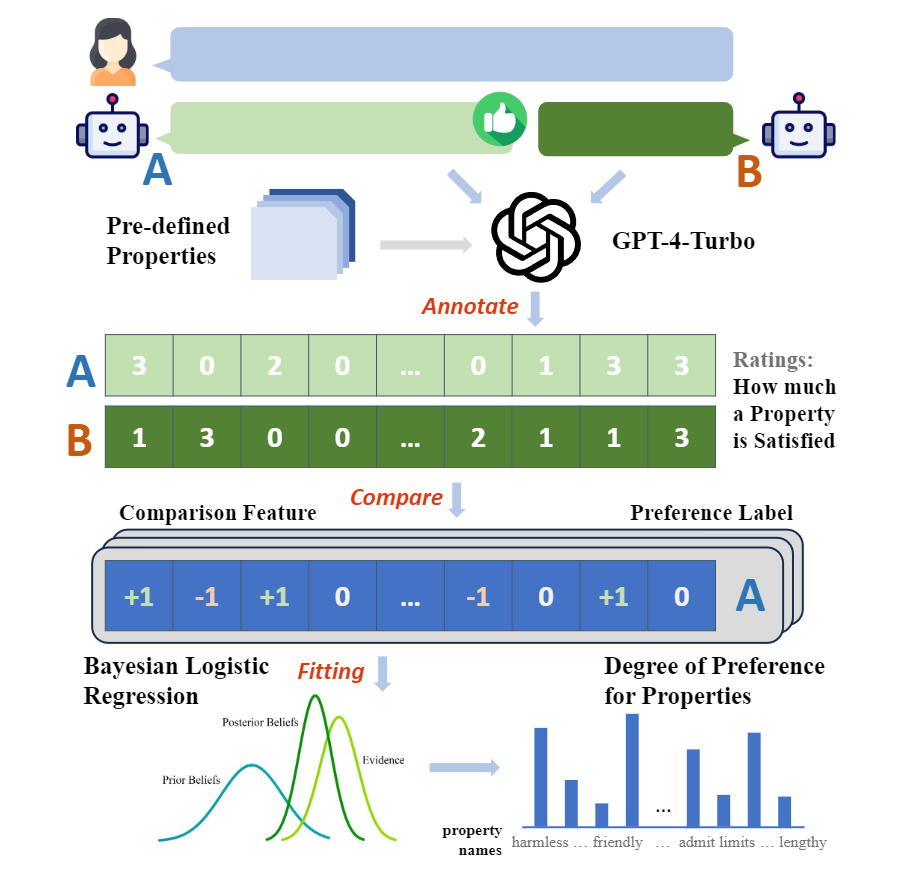

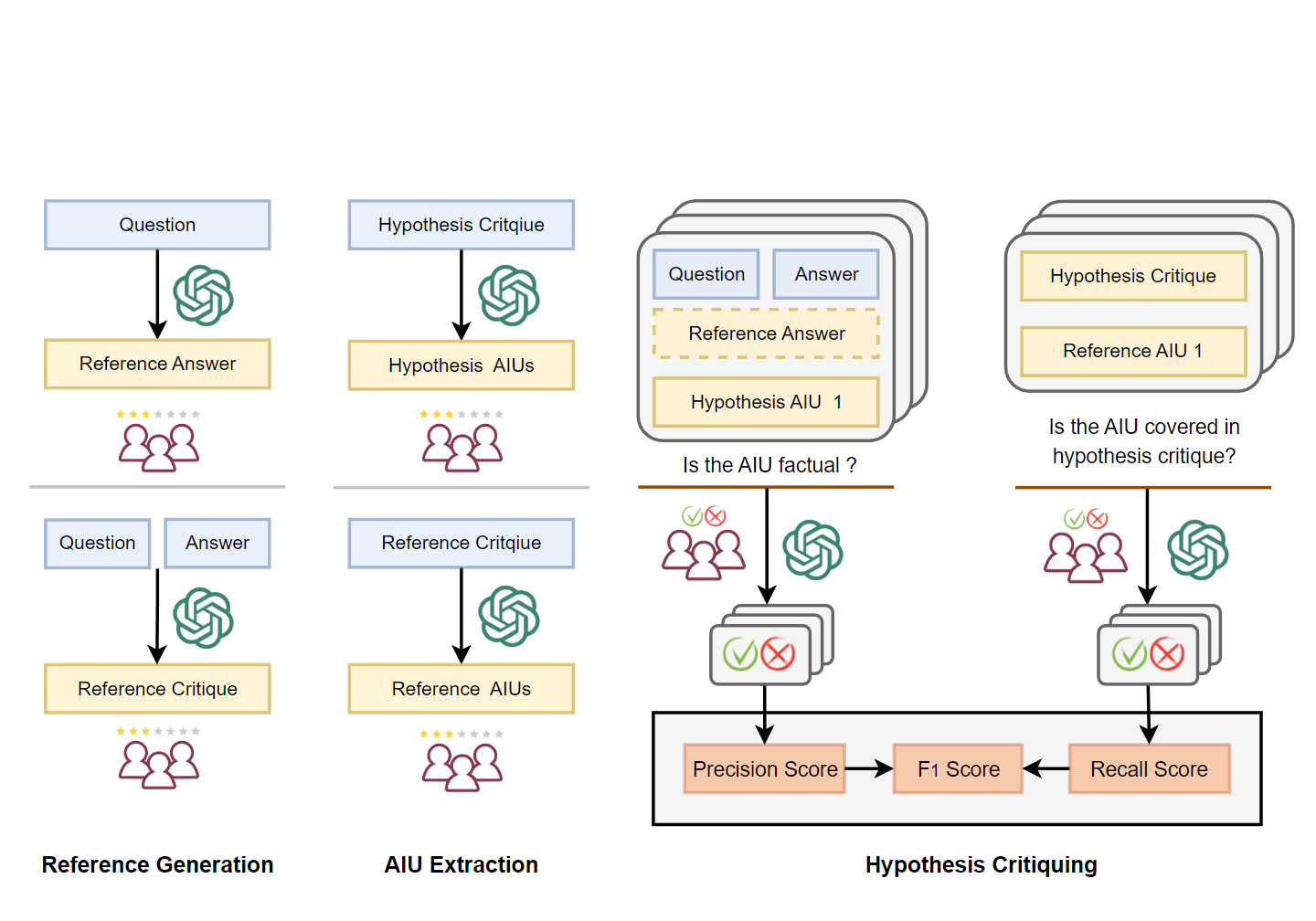

- SAFETY-J: Evaluating Safety with Critique (2024)

- Weak-to-Strong Reasoning (2024)

For more papers, please see our Google Scholar page.